Hello bloggers, today I will show you how to add a robots.txt file to your blog and what the benefits of adding it are. So let's get started.

What Is a Robots.txt File?

If you are new to blogging, read this post carefully.

If you want your site or blog to show up in search engine results, you should know about robots.txt.

Many new bloggers create a blog and publish quite good posts, yet still get no traffic from search engines. Why does this happen? One common reason is that robots.txt and a few other settings have not been set up on the site.

Robots.txt gives instructions to search engine robots. It helps them browse the pages and posts on your site. Search engine robots crawl and index your site.

How Does Robots.txt Work?

Suppose there are some pages on our site that we do not want to show in search engines, for example

www.hindimeearn.com/about_me.html

We can disallow such a page with the help of the robots.txt file.

A robots.txt file looks something like this.

User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow: /search
Allow: /

Sitemap: http://yourblogname.com/sitemap.xml

Let's understand the code in this file.

If you have not created a sitemap yet, click here, enter your site's URL, and use the same settings shown in the image below.

User-agent: Mediapartners-Google

This line addresses the Google AdSense crawler. If you use Google AdSense on your site, this Google bot will check your site's URLs.

Disallow:

With this directive you can stop search engine bots from crawling a URL. Left empty, as it is here, it blocks nothing, so the AdSense bot may crawl every page.

Disallow: /search

With this you can stop a specific folder or label from being crawled.

e.g. www.mysite.com/search/label/makemoney

User-agent: *

This addresses the agents, that is the robots, of all search engines (Google, Yahoo, Yandex).

Allow: /

This gives robots permission to crawl all pages.

Sitemap:

This is the sitemap of your site or blog. It is the most important line, because robots use it to find and index your site's URLs and all of your posts.
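To see how these directives work together, here is a minimal sketch in Python using the standard library's urllib.robotparser module. It feeds the sample file from this post to the parser and asks whether a crawler may fetch a given URL. The post path /2020/01/my-post.html is a made-up example, yourblogname.com is only a placeholder, and Python's parser may not match every detail of how Google's own crawler reads the file.

```python
from urllib.robotparser import RobotFileParser

# The sample robots.txt from this post (yourblogname.com is a placeholder).
ROBOTS_TXT = """\
User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow: /search
Allow: /

Sitemap: http://yourblogname.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

site = "http://yourblogname.com"
label_url = site + "/search/label/makemoney"
post_url = site + "/2020/01/my-post.html"  # hypothetical post path

# Ordinary crawlers fall under the "User-agent: *" group.
print("Googlebot, label page:", parser.can_fetch("Googlebot", label_url))
print("Googlebot, post page:", parser.can_fetch("Googlebot", post_url))

# The AdSense crawler has its own group with an empty Disallow.
print("AdSense bot, label page:", parser.can_fetch("Mediapartners-Google", label_url))

# Sitemap URLs declared in the file (site_maps() needs Python 3.8 or newer).
print("Sitemaps:", parser.site_maps())
```

Run as written, the label page should be reported as blocked for Googlebot, while the post page and the AdSense crawler's request should be allowed.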

If you want to disallow a particular page in search engines, you only need to make a change like this in the code above.

Disallow: /p/about_us.html
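As a rough check of this change, the sketch below adds the line to the "User-agent: *" group and tests two pages with Python's urllib.robotparser. Placing the line in that group is my assumption, and /p/contact.html is a hypothetical second page used only for comparison.

```python
from urllib.robotparser import RobotFileParser

# The "User-agent: *" rules from the sample file, plus one extra
# Disallow line for a single page (its position in the group is an assumption).
rules = [
    "User-agent: *",
    "Disallow: /search",
    "Disallow: /p/about_us.html",
    "Allow: /",
]

parser = RobotFileParser()
parser.parse(rules)

site = "http://yourblogname.com"  # placeholder domain
about_blocked = not parser.can_fetch("*", site + "/p/about_us.html")
contact_blocked = not parser.can_fetch("*", site + "/p/contact.html")  # hypothetical page

print("about_us blocked:", about_blocked)
print("contact blocked:", contact_blocked)
```

Only the about_us page should come out blocked; every other page still falls through to Allow: /.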

How to Add a Robots.txt File to Your Site or Blog:

Step 1:

Go to Blogger.com and open your blog's dashboard.

Step 2:

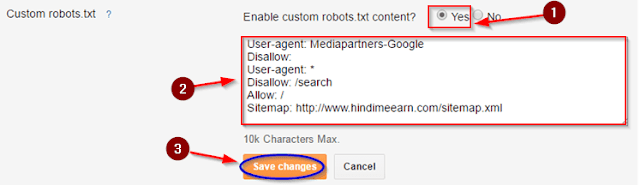

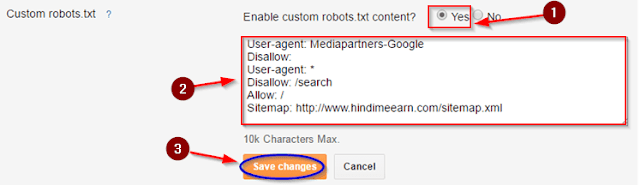

Then, further down, go to Settings => Search Preferences => Crawlers and indexing => Custom robots.txt => Edit => Yes.

Step 3:

Copy the code given in this post and put your own site's URL in it.

Step 4:

Paste the code and click Save.
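If you would rather not edit the URL by hand in Step 3, a small helper can fill it in. This is only a sketch: build_robots_txt is a name I made up, and it assumes your sitemap lives at /sitemap.xml as in the sample file.

```python
def build_robots_txt(site_url: str) -> str:
    """Return the sample robots.txt with the Sitemap line pointing at site_url."""
    base = site_url.rstrip("/")  # avoid a double slash before sitemap.xml
    lines = [
        "User-agent: Mediapartners-Google",
        "Disallow:",
        "",
        "User-agent: *",
        "Disallow: /search",
        "Allow: /",
        "",
        f"Sitemap: {base}/sitemap.xml",
    ]
    return "\n".join(lines) + "\n"


# Replace the placeholder with your own blog address, then copy the output.
print(build_robots_txt("http://yourblogname.com/"))
```

The printed text is what you paste into the Custom robots.txt box in Step 4.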

You have now added the robots.txt file. To check it, type www.yoursitename.com/robots.txt in your browser and see what comes up.
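The same check can be done from a script. The sketch below uses Python's standard urllib.request; robots_url and fetch_robots are names I made up for illustration, and the download needs network access and a real domain, so that call is left commented out.

```python
from urllib.request import urlopen


def robots_url(site: str) -> str:
    """Build the robots.txt address for a site, adding a scheme if missing."""
    if not site.startswith(("http://", "https://")):
        site = "http://" + site
    return site.rstrip("/") + "/robots.txt"


def fetch_robots(site: str, timeout: float = 10.0) -> str:
    """Download robots.txt and return its text (needs network access)."""
    with urlopen(robots_url(site), timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


print(robots_url("www.yoursitename.com"))
# Uncomment with your real domain to print the live file:
# print(fetch_robots("www.yoursitename.com"))
```

If the printed file matches what you pasted in Step 4, the setting has been saved.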

So friends, today we saw how to add a robots.txt file to our blog and what its benefits are.

NOTE: If you put the wrong code in your robots.txt file, search engines may skip your site and it will not appear in search results. For example, a stray Disallow: / under User-agent: * tells every crawler to stay away from the whole site. So add the robots.txt file very carefully.
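To catch simple typing mistakes before you save, a quick check like the one below can help. It is a sketch, not a full validator: find_problems is a made-up name, and it treats only the four fields used in this post as known, so other legitimate fields would be flagged too.

```python
# Only the fields used in this post; treating everything else as unknown is an assumption.
KNOWN_FIELDS = {"user-agent", "disallow", "allow", "sitemap"}


def find_problems(robots_txt: str) -> list[str]:
    """Return a message for each line that does not look like a known directive."""
    problems = []
    for number, raw in enumerate(robots_txt.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue  # blank lines only separate groups
        field, sep, _value = line.partition(":")
        if not sep:
            problems.append(f"line {number}: missing ':' in {line!r}")
        elif field.strip().lower() not in KNOWN_FIELDS:
            problems.append(f"line {number}: unknown field {field.strip()!r}")
    return problems


# "Useragent" (no hyphen) and "Allow /" (no colon) are two easy mistakes to make.
for message in find_problems("Useragent: *\nDisallow: /search\nAllow /\n"):
    print(message)
```

An empty result does not prove the file does what you want, but it does rule out misspelled field names.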

If you have any problem related to this post, don't forget to leave a comment below.

Happy Blogging :)
